AI Breakthrough: New AI Tool Creates Hyper-Realistic Faces From Pixelated Images

Artificial intelligence (AI) has rapidly matured into a technology that is altering the world we live in. Advances in modern computing have brought a wave of major AI breakthroughs that have reshaped industries and simplified everyday life.

One of the latest is a tool developed by a team of researchers from Duke University in North Carolina. Dubbed ‘Photo Upsampling via Latent Space Exploration’ (PULSE), the tool transforms blurry, unidentifiable faces into hyper-realistic computer-generated portraits.

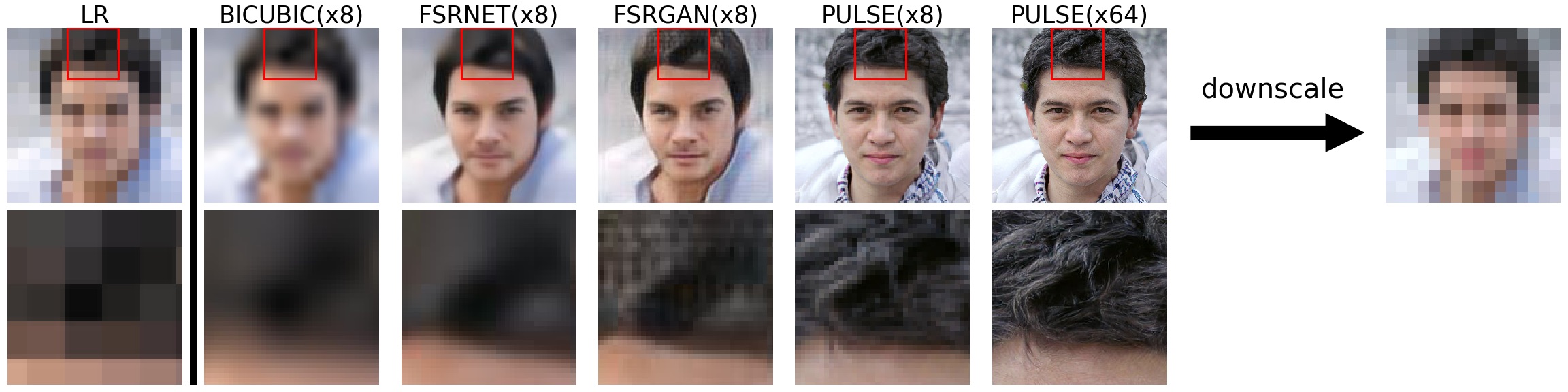

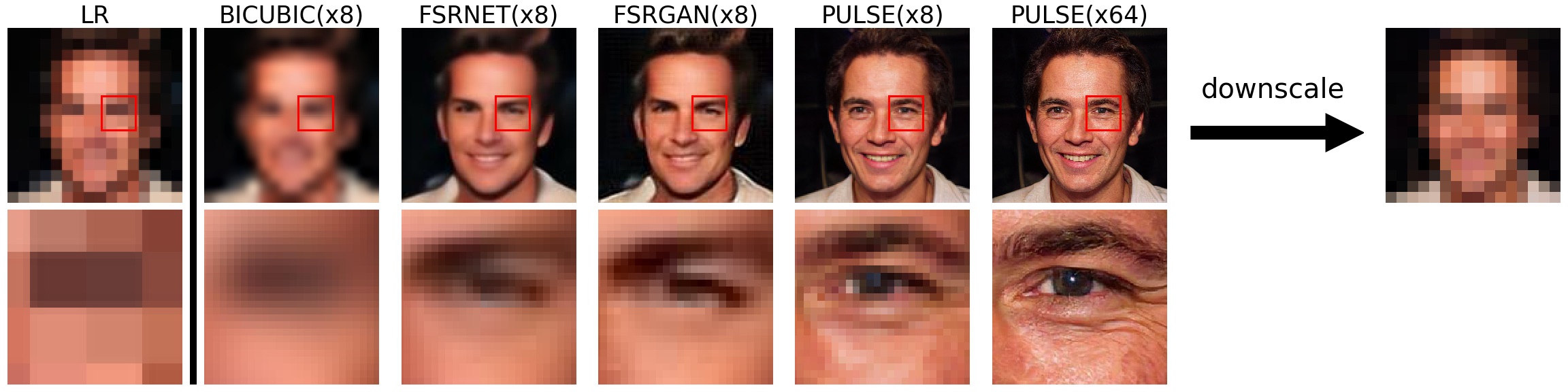

PULSE vs. Previous Technologies

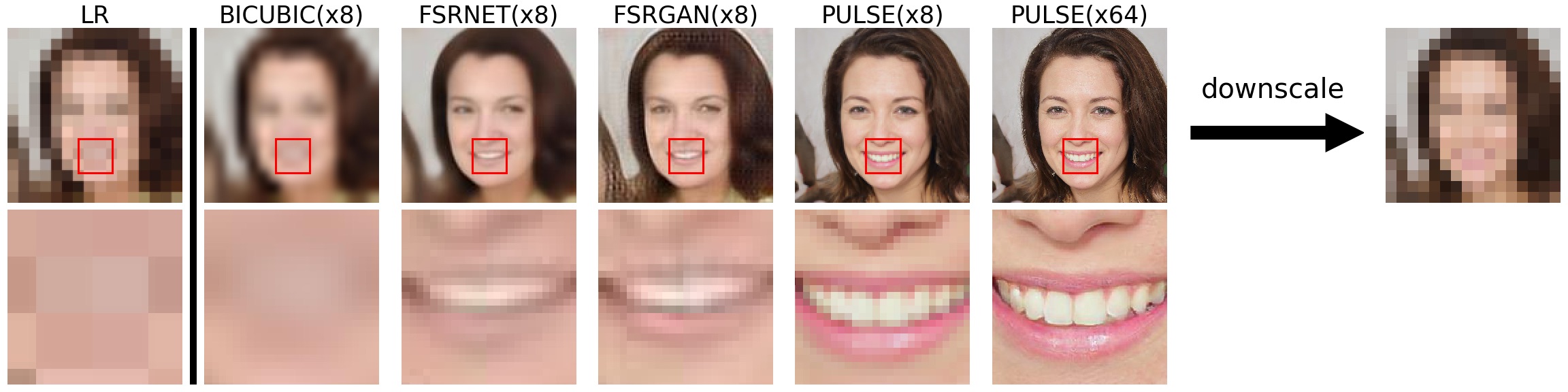

PULSE compared to other scaling methods. Screenshots from pulse.cs.duke.edu

In the past, images could only be scaled up to eight times their original resolution. Using PULSE, researchers were able to scale pixelated images, often lacking recognizable features such as eyes, nose and lips, up to 64 times their original resolution. PULSE creates facial features from scratch, with details such as fine lines, eyelashes and even stubble.

“Never have super-resolution images been created at this resolution before with this much detail,” said Duke computer scientist Cynthia Rudin, the research leader.

Doppelganger Generator

To be clear, the tool is not used to identify the real people behind the images. Rather, it generates new faces that don’t exist but look plausibly, even eerily, real. According to Rudin, each time a single blurred image of a face is scaled up, the tool produces a number of lifelike variations, each of which looks slightly different.

How It Works

Traditional approaches take a low-resolution image and ‘guess’ which extra pixels are needed by trying to get them to match. PULSE works the other way around: it generates examples of high-resolution faces and searches for ones that closely match the input when shrunk down to the same size.
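The search described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the generator here is a hypothetical toy (a fixed random linear map followed by `tanh`) standing in for a trained face generator, the search is plain random hill-climbing, and all function names and parameters are assumptions made for illustration. The only idea it shares with the article is the direction of the search: propose high-resolution candidates, shrink each one, and keep those whose shrunk version is closest to the low-resolution input.

```python
import numpy as np


def downscale(img, factor):
    """Shrink a 2-D image by averaging each factor x factor block of pixels."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def make_toy_generator(latent_dim, size, seed=0):
    """Hypothetical stand-in for a trained generator: latent vector -> image."""
    weights = np.random.default_rng(seed).normal(size=(size * size, latent_dim))

    def generate(z):
        return np.tanh(weights @ z / np.sqrt(latent_dim)).reshape(size, size)

    return generate


def latent_search(generate, low_res, factor, latent_dim,
                  steps=500, step_size=0.1, seed=1):
    """Hill-climb over latent vectors so the shrunk output matches low_res."""
    rng = np.random.default_rng(seed)

    def loss_of(z):
        return float(np.mean((downscale(generate(z), factor) - low_res) ** 2))

    z = rng.normal(size=latent_dim)
    initial_loss = loss = loss_of(z)
    for _ in range(steps):
        candidate = z + step_size * rng.normal(size=latent_dim)
        candidate_loss = loss_of(candidate)
        if candidate_loss < loss:  # keep only candidates that match better
            z, loss = candidate, candidate_loss
    return generate(z), initial_loss, loss


if __name__ == "__main__":
    generate = make_toy_generator(latent_dim=16, size=32)
    # Build a low-resolution "input" by shrinking one generated image.
    hidden = generate(np.random.default_rng(42).normal(size=16))
    low_res = downscale(hidden, factor=4)
    high_res, before, after = latent_search(generate, low_res, 4, 16)
    print(high_res.shape, before, after)
```

Starting the search from a different random seed generally ends at a different high-resolution image that still shrinks to roughly the same input, which is one way to picture why a single blurry photo can yield several plausible faces.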

The team used a generative adversarial network (GAN), a machine learning technique in which two neural networks compete to produce the most realistic output. Within a few seconds, the system can turn a 16×16-pixel image of a face into a 1024×1024-pixel one. That is a 64-fold increase along each side, or 4,096 times as many pixels, bringing the image up to HD resolution.

Traditional methods generate results that don’t fit all of the pixels, producing textured areas and fuzzy patches, particularly on hair and skin. PULSE instead creates computer-generated versions that are crisper and clearer in detail, down to pores, wrinkles and wisps of hair that are imperceptible in the low-resolution photos.

Application

According to research co-author Sachit Menon, who has a double major in mathematics and computer science, the research focused on faces as a proof of concept, but the same technique could in theory create sharp, realistic-looking pictures from almost any low-resolution image. PULSE’s potential applications range from medicine and microscopy to astronomy and satellite imagery.

The team recently presented their method at the 2020 Conference on Computer Vision and Pattern Recognition (CVPR), the premier event for technology professionals and visionaries in computing technology, machine learning and artificial intelligence, the fields behind self-driving cars, facial recognition, social media apps and other technological innovations.